AI became our after-hours doctor. Now it’s trying to earn that trust

Over 40 million Americans ask ChatGPT health questions every week. Globally, that number hits 230 million. And 60% of U.S. adults have used AI for health or healthcare in the past three months.

The appeal is clear: AI doesn’t require appointments, doesn’t judge midnight symptom spirals, and explains confusing lab results in plain English. Most health conversations happen outside clinic hours—when people can’t reach their doctors.

But we also know AI hallucinates, gives outdated advice, and doesn’t know your medical history. People often turn to it when they are anxious and least equipped to judge its accuracy. And despite that widespread use, 60% of Americans say they’d be uncomfortable if their doctor relied on AI for care.

That gap between use and trust is what OpenAI, Amazon, and Anthropic are trying to close. Since January 2026, all three have launched dedicated health AI tools with better privacy, personalization, and clinical guardrails.

So what’s new

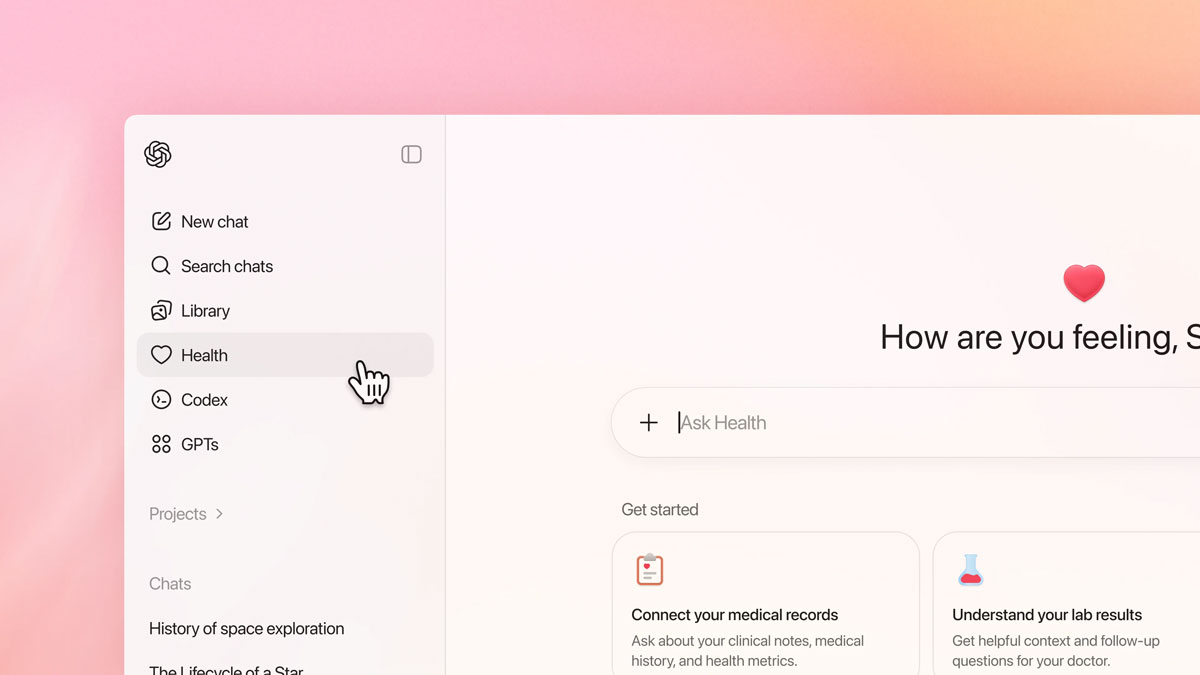

ChatGPT Health. OpenAI launched a dedicated health experience. It connects medical records and wellness apps like Apple Health, Function, and MyFitnessPal so responses can reference your actual test results and medications. The company says all health conversations live in a separate encrypted space and aren’t used to train models. Currently on a U.S. waitlist.


Amazon One Medical’s Health AI. Amazon took a different approach by building the assistant directly into the One Medical app, where your medical records already exist. It answers questions based on your history, explains lab results, and books same-day appointments when you need a real doctor. The integration feels less like adding AI to healthcare and more like AI working within it. HIPAA-compliant from the start.

Claude for Healthcare. Anthropic’s entry focuses on providers rather than patients. Anthropic built tools to speed up prior authorizations, check coverage requirements, and support clinical decisions. It’s less visible to consumers but addresses a real problem: the administrative delays that slow down care before it even reaches patients.

The gaps these tools target

After-hours access. Most healthcare happens between 9 and 5. When you have a health question at 11 PM, your options are limited: wait until morning, pay for urgent care, or ask the internet. These tools are designed to provide informed guidance when your doctor’s office is closed.

Fragmented information. Medical records are scattered across providers, portals, and apps. Test results arrive with no context. Discharge instructions use jargon most people don’t understand. AI health tools consolidate health information in one place and translate medical language into clear instructions.

Administrative delays. Prior authorizations, coverage checks, and paperwork slow down care. Anthropic’s tool targets this directly by automating the administrative tasks that create bottlenecks between patients and treatment.

The bottom line: AI wants to fix a broken system

AI health tools aren’t replacing doctors, but they are becoming part of how people navigate healthcare. The question isn’t whether to use them — most people already are. It’s whether the tools being built now can earn the trust that the stakes require.

Privacy protections, medical record integration, and built-in limits are a start for AI in healthcare, though AI will never replace critical care and diagnosis. Whether these features are enough will depend on how the tools perform in practice, not just how they’re marketed.

Published on Jan 30, 2026 by
